A/B Testing Email Subject Lines

A/B testing lets you compare subject line variations to improve your email open rates. The system sends each variation to a portion of your audience (the test group), measures how each one performs, and then sends the winning variation to the remaining recipients.

How A/B Testing Works

- Create Variations: Add multiple subject line variations to test against your original subject

- Test Phase: A percentage of your audience (the test group) receives the variations, split evenly among them

- Winner Selection: The best-performing variation is chosen, either automatically by the system or manually by you

- Final Send: The winning subject line is sent to the remaining recipients

Setting Up A/B Testing

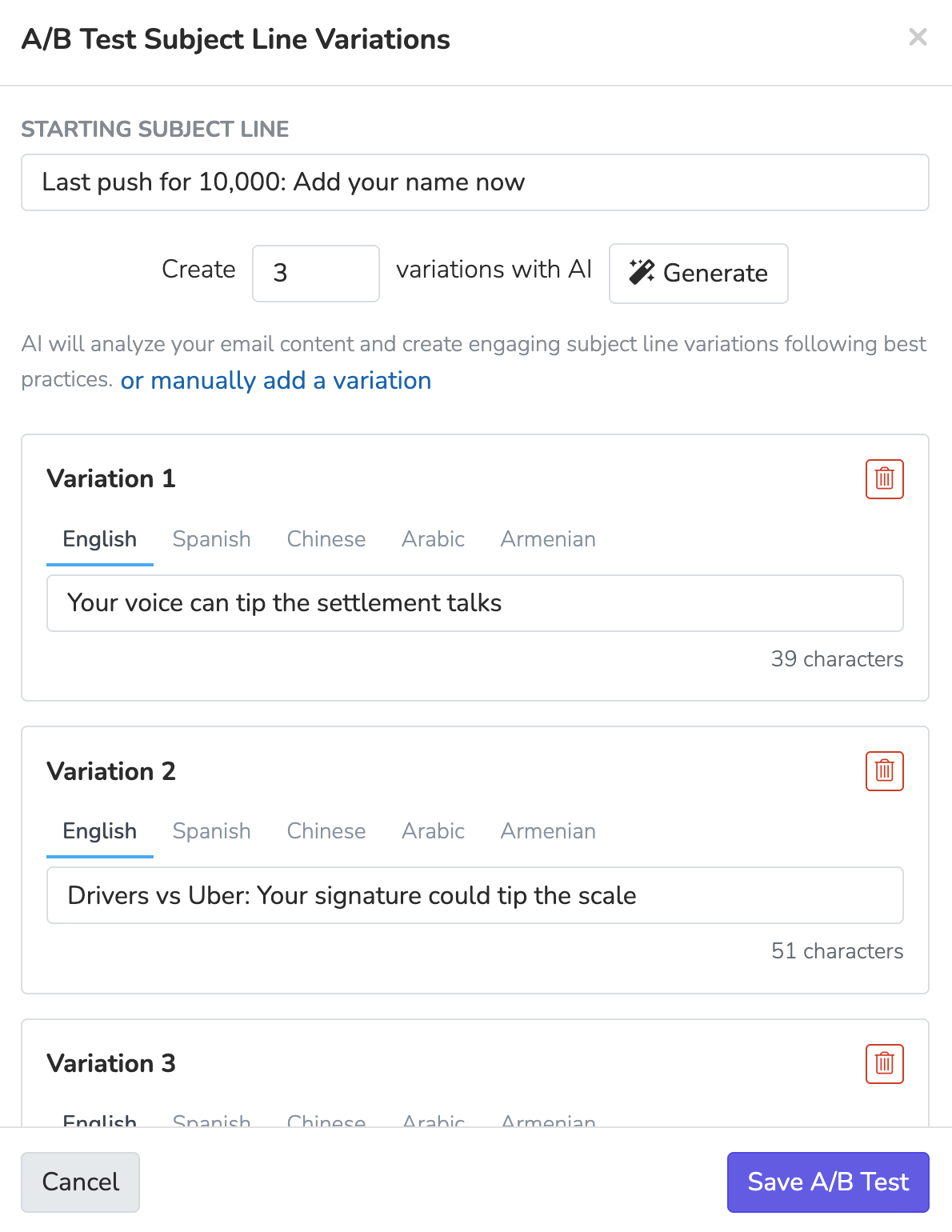

Adding Subject Line Variations

- Navigate to the Subject section of your email blast

- Click the A/B Test Variations button

- Use AI generation or manually create up to 8 subject line variations

- Save your A/B test configuration

AI Generation: The system can automatically generate subject line variations based on your email content and best practices. Simply specify how many variations you want (1-8) and click "Generate".

Manual Creation: Click "manually add a variation" to create custom subject lines for testing.

A/B Testing Settings

Configure your A/B test parameters in the Settings section:

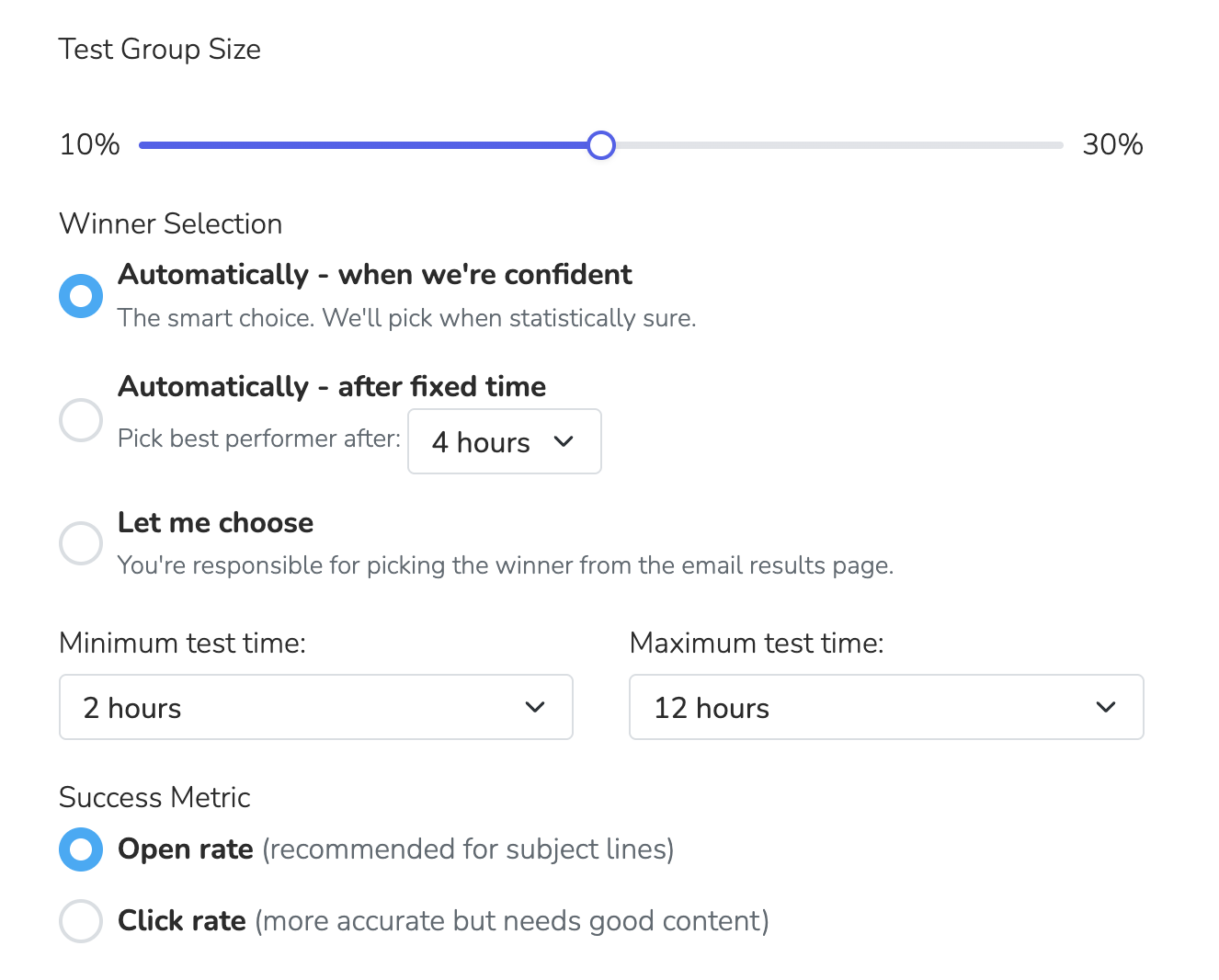

Test Group Size

- Range: 10% - 30% of your total audience

- Default: 20%

- Purpose: Determines what percentage of recipients will participate in the A/B test
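As a rough illustration of how the test group percentage translates into recipient counts, here is a minimal Python sketch. The function name `split_audience` is hypothetical, and rounding the test group down to a whole recipient is an assumption; the product may round differently.

```python
from typing import Tuple


def split_audience(total_recipients: int, test_pct: int = 20) -> Tuple[int, int]:
    """Hypothetical helper: return (test_group_size, remaining_recipients).

    test_pct must be within the documented 10-30% range (default 20%).
    Rounding down to a whole recipient is an assumption.
    """
    if not 10 <= test_pct <= 30:
        raise ValueError("test group size must be between 10% and 30%")
    test_size = total_recipients * test_pct // 100  # integer floor division
    return test_size, total_recipients - test_size


print(split_audience(5000))      # (1000, 4000)
print(split_audience(1234, 15))  # (185, 1049)
```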

Winner Selection Methods

Automatically - when we're confident (Recommended)

- The smart choice for most campaigns

- Uses statistical analysis to determine when one variation is a clear winner, and selects it as soon as the result is statistically significant

- Minimum test time: 1-4 hours (default: 2 hours)

- Maximum test time: 12-48 hours (default: 12 hours)

Automatically - after fixed time

- Selects the best performer after a predetermined time period

- Options: 2, 4, 8, 12, or 24 hours

- Default: 4 hours

Let me choose

- You manually select the winning variation from the results page

- Best for campaigns where you want full control over the selection
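The setting ranges above can be summarized as a validation check. This is a hypothetical sketch: the `ABTestSettings` class, the method identifiers, and the assumption that times are whole hours are illustrative and not part of the product's API.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical identifiers for the three winner selection methods.
METHODS = {"auto_confident", "auto_fixed_time", "manual"}
FIXED_TIME_OPTIONS = {2, 4, 8, 12, 24}  # hours


@dataclass
class ABTestSettings:
    """Hypothetical settings record; defaults match the documented defaults."""
    test_pct: int = 20        # Test group size, 10-30%
    method: str = "auto_confident"
    min_hours: int = 2        # auto_confident: minimum test time, 1-4 h
    max_hours: int = 12       # auto_confident: maximum test time, 12-48 h
    fixed_hours: int = 4      # auto_fixed_time: one of FIXED_TIME_OPTIONS

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the settings are valid."""
        errors = []
        if not 10 <= self.test_pct <= 30:
            errors.append("test_pct must be between 10 and 30")
        if self.method not in METHODS:
            errors.append(f"unknown method: {self.method}")
        if self.method == "auto_confident":
            if not 1 <= self.min_hours <= 4:
                errors.append("min_hours must be between 1 and 4")
            if not 12 <= self.max_hours <= 48:
                errors.append("max_hours must be between 12 and 48")
        if self.method == "auto_fixed_time" and self.fixed_hours not in FIXED_TIME_OPTIONS:
            errors.append("fixed_hours must be one of 2, 4, 8, 12, 24")
        return errors
```

Time settings are only checked for the method that uses them, so a manual test ignores the automatic timing fields.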

Success Metrics

Open Rate (Recommended for subject lines)

- Measures how many recipients opened the email

- Best metric for testing subject line effectiveness

Click Rate

- Measures how many recipients clicked links in the email

- More reliable than open tracking, but only useful if your email content gives recipients a reason to click

Billing Plan Requirements

A/B testing is available on the Standard plan and above. If you're on a lower-tier plan, the A/B Test Variations button is disabled, and its tooltip explains the upgrade requirement.

A/B Testing Process

Phase 1: Test Sending

- The system sends different subject line variations to your test group

- Each variation is sent to an equal portion of the test audience

- Status shows as "Sending A/B Test" during this phase
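One common way to give each variation an equal portion of the test group is to shuffle recipients and deal them out round-robin. The sketch below assumes that approach and a hypothetical `assign_variations` helper; the system's actual assignment logic is not documented here. It also assumes the original subject counts as one of the subjects and that subject lines are unique.

```python
import random
from typing import Dict, List, Optional


def assign_variations(
    test_group: List[str], subjects: List[str], seed: Optional[int] = None
) -> Dict[str, List[str]]:
    """Hypothetical helper: split the test group evenly across subject lines.

    Recipients are shuffled, then dealt round-robin, so bucket sizes differ
    by at most one. Assumes subject lines are unique.
    """
    rng = random.Random(seed)
    shuffled = list(test_group)
    rng.shuffle(shuffled)
    buckets: Dict[str, List[str]] = {subject: [] for subject in subjects}
    for i, recipient in enumerate(shuffled):
        buckets[subjects[i % len(subjects)]].append(recipient)
    return buckets


recipients = [f"user{n}@example.com" for n in range(10)]
groups = assign_variations(recipients, ["Original", "Variation A", "Variation B"], seed=1)
print({subject: len(members) for subject, members in groups.items()})
# {'Original': 4, 'Variation A': 3, 'Variation B': 3}
```

Shuffling first keeps the split random, so no variation systematically receives, for example, the oldest subscribers at the top of the list.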

Phase 2: Analysis & Winner Selection

- The system analyzes performance metrics (open rate or click rate)

- Winner selection happens based on your chosen method:

  - Auto-confident: Uses statistical significance testing

  - Fixed time: Waits for the specified time period

  - Manual: Waits for your selection
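The three winner selection methods can be expressed as a single readiness check. This is a hypothetical sketch: `ready_to_select` and its parameters are illustrative, and the behavior at the maximum test time (stop waiting for confidence) is an assumption, since this page only says the maximum prevents indefinite testing. Business hours are handled separately and are not checked here.

```python
from typing import Optional


def ready_to_select(
    method: str,
    elapsed_hours: float,
    *,
    confident: bool = False,              # result of the statistical check
    manual_choice: Optional[str] = None,  # subject line picked on the results page
    min_hours: float = 2,                 # documented defaults
    max_hours: float = 12,
    fixed_hours: float = 4,
) -> bool:
    """Hypothetical check: has the test reached the point where a winner can be chosen?

    Business hours are not considered here; per this page, they can delay selection further.
    """
    if method == "auto_confident":
        # Never before the minimum test time; stop early once confident.
        # ASSUMPTION: at the maximum test time the system stops waiting for confidence.
        return elapsed_hours >= min_hours and (confident or elapsed_hours >= max_hours)
    if method == "auto_fixed_time":
        return elapsed_hours >= fixed_hours
    if method == "manual":
        return manual_choice is not None
    raise ValueError(f"unknown method: {method}")
```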

Business Hours Protection

Important: Winner selection only occurs during business hours (10 AM - 5 PM) in your organization's timezone to ensure emails are sent at appropriate times. If the system is ready to select a winner outside business hours, it will wait until the next business day to make the selection and send the winning variation.

This prevents your email blasts from being sent to recipients during off-hours, even if the A/B test analysis is complete.
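The business hours rule amounts to pushing any selection time that falls outside 10 AM to 5 PM forward to the next opening. A minimal sketch, assuming 5 PM is exclusive and, by default, that every day of the week counts as a business day (the `skip_weekends` flag is a hypothetical option for treating Saturday and Sunday as non-business days). `next_selection_time` is an illustrative name, not a product API. Pass a `tzinfo` for your organization's timezone; in practice that would typically be something like `zoneinfo.ZoneInfo("America/New_York")`.

```python
from datetime import datetime, time, timedelta, timezone, tzinfo

OPEN = time(10, 0)   # 10 AM, organization local time
CLOSE = time(17, 0)  # 5 PM; treated as exclusive (assumption)


def next_selection_time(ready_at: datetime, org_tz: tzinfo, skip_weekends: bool = False) -> datetime:
    """Hypothetical helper: earliest moment at or after ready_at inside business hours.

    ready_at must be timezone-aware. The result is expressed in org_tz.
    """
    candidate = ready_at.astimezone(org_tz)
    while True:
        weekend = candidate.weekday() >= 5  # Saturday = 5, Sunday = 6
        if not (skip_weekends and weekend):
            if candidate.time() < OPEN:
                # Before opening: wait until 10 AM the same day.
                return candidate.replace(hour=OPEN.hour, minute=OPEN.minute, second=0, microsecond=0)
            if candidate.time() < CLOSE:
                # Already inside business hours: select now.
                return candidate
        # After closing (or a skipped weekend day): try the start of the next day.
        candidate = (candidate + timedelta(days=1)).replace(hour=0, minute=0, second=0, microsecond=0)


eastern = timezone(timedelta(hours=-5))  # fixed offset for the example; prefer zoneinfo in practice
print(next_selection_time(datetime(2024, 3, 4, 18, 30, tzinfo=eastern), eastern))
# 2024-03-05 10:00:00-05:00
```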

Phase 3: Final Send

- The winning variation is sent to all remaining recipients

- Status shows as "Sending Winner" during this phase

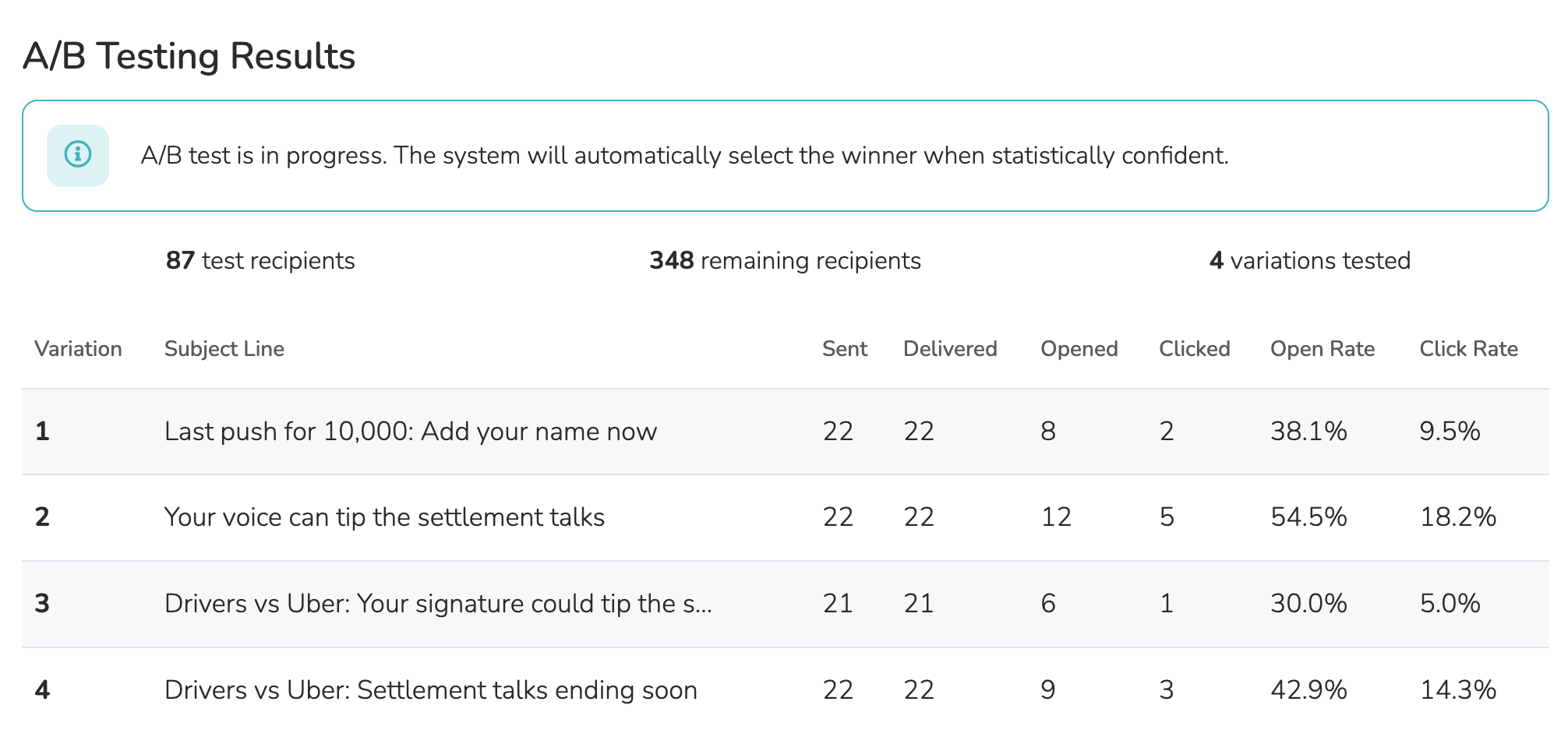

Viewing A/B Testing Results

The results page shows detailed performance metrics for each variation:

- Subject Line: The actual subject text tested

- Sent: Number of emails sent for this variation

- Delivered: Number of emails successfully delivered

- Opened: Number of recipients who opened the email

- Clicked: Number of recipients who clicked links

- Open Rate: Percentage of delivered emails that were opened

- Click Rate: Percentage of delivered emails whose recipients clicked a link
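Both rates use delivered emails as the denominator. A small sketch of the calculation, using a hypothetical `VariationResult` record whose fields mirror the columns above; the numbers are illustrative, and returning 0.0 when nothing was delivered is an assumption.

```python
from dataclasses import dataclass


@dataclass
class VariationResult:
    """Hypothetical record mirroring one row of the results page."""
    subject: str
    sent: int
    delivered: int
    opened: int
    clicked: int

    @property
    def open_rate(self) -> float:
        """Percentage of delivered emails that were opened."""
        return 100 * self.opened / self.delivered if self.delivered else 0.0

    @property
    def click_rate(self) -> float:
        """Percentage of delivered emails whose recipients clicked a link."""
        return 100 * self.clicked / self.delivered if self.delivered else 0.0


result = VariationResult("Last chance: offer ends tonight", sent=500, delivered=480, opened=120, clicked=24)
print(result.open_rate, result.click_rate)  # 25.0 5.0
```

Because the denominator is delivered rather than sent, bounced emails do not lower a variation's rates.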

Status Indicators

Your email blast will show different status badges during A/B testing:

- Draft: Email not yet sent, A/B test configured

- Sending A/B Test: Currently sending test variations

- A/B Testing: Test variations sent, analyzing results

- Awaiting Winner: Manual selection required

- Sending Winner: Sending winning variation to remaining recipients

- Sent: A/B test completed and all emails delivered
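The statuses above form a simple progression. The sketch below encodes one plausible set of transitions, inferred from the status descriptions on this page (for example, that Awaiting Winner appears only when manual selection is required); the `BlastStatus` enum and `advance` helper are hypothetical.

```python
from enum import Enum


class BlastStatus(Enum):
    DRAFT = "Draft"
    SENDING_AB_TEST = "Sending A/B Test"
    AB_TESTING = "A/B Testing"
    AWAITING_WINNER = "Awaiting Winner"
    SENDING_WINNER = "Sending Winner"
    SENT = "Sent"


# ASSUMPTION: transitions inferred from the status descriptions, not from the product.
TRANSITIONS = {
    BlastStatus.DRAFT: {BlastStatus.SENDING_AB_TEST},
    BlastStatus.SENDING_AB_TEST: {BlastStatus.AB_TESTING},
    # Automatic methods go straight to the final send; manual selection waits first.
    BlastStatus.AB_TESTING: {BlastStatus.SENDING_WINNER, BlastStatus.AWAITING_WINNER},
    BlastStatus.AWAITING_WINNER: {BlastStatus.SENDING_WINNER},
    BlastStatus.SENDING_WINNER: {BlastStatus.SENT},
    BlastStatus.SENT: set(),  # terminal: the A/B test is complete
}


def advance(current: BlastStatus, nxt: BlastStatus) -> BlastStatus:
    """Return the new status, or raise if the move is not an allowed transition."""
    if nxt not in TRANSITIONS[current]:
        raise ValueError(f"invalid transition: {current.value} -> {nxt.value}")
    return nxt
```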

Best Practices

Subject Line Variations

- Test significantly different approaches (urgency vs. curiosity, long vs. short)

- Avoid testing minor changes like punctuation

- Consider your audience and brand voice

- Test emotional triggers, personalization, or urgency

Test Size Guidelines

- Small lists (< 1,000): Use 20-30% test size for meaningful results

- Medium lists (1,000-10,000): Use 15-25% test size

- Large lists (> 10,000): Use 10-20% test size
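The guideline ranges can be written as a lookup, together with the per-variation arithmetic that explains why smaller lists need a larger test share. This is a sketch with hypothetical function names; list sizes of exactly 1,000 and 10,000 are treated as medium, following the ranges above.

```python
from typing import Tuple


def recommended_test_pct(list_size: int) -> Tuple[int, int]:
    """Return the (low, high) test group percentage suggested above."""
    if list_size < 1_000:
        return (20, 30)   # small lists
    if list_size <= 10_000:
        return (15, 25)   # medium lists (1,000-10,000 inclusive)
    return (10, 20)       # large lists


def recipients_per_variation(list_size: int, test_pct: int, n_subjects: int) -> int:
    """Approximate recipients each subject line gets during the test phase."""
    return list_size * test_pct // 100 // n_subjects


print(recommended_test_pct(800))             # (20, 30)
print(recipients_per_variation(800, 20, 4))  # 40
print(recipients_per_variation(800, 30, 4))  # 60
```

For an 800-recipient list with four subject lines, a 20% test gives each subject only 40 recipients, while 30% gives 60, which is why small lists benefit from the upper end of the range.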

Timing Considerations

- Allow enough time for meaningful data collection

- Consider your audience's email checking patterns

- Avoid testing during holidays or unusual sending times

Statistical Confidence

When using "Automatically - when we're confident" winner selection, the system uses statistical analysis to ensure reliable results:

- Minimum sample size: Adapts based on your audience size

- Confidence levels: 85% for small audiences, 95% for larger audiences

- Meaningful difference: The leading variation must beat the others by a minimum improvement threshold

- Time limits: The maximum test time prevents tests from running indefinitely
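This page does not say which statistical test the system uses. For illustration only, here is a two-sided two-proportion z-test on open rates that applies the documented confidence levels (85% for small audiences, 95% for larger ones). The `small_audience_cutoff`, `min_sample`, and `min_lift` values are hypothetical placeholders for the adaptive sample size and minimum improvement thresholds described above, and the function compares only two variations.

```python
import math
from statistics import NormalDist


def is_confident(
    opens_a: int, delivered_a: int,
    opens_b: int, delivered_b: int,
    audience_size: int,
    small_audience_cutoff: int = 5_000,  # hypothetical boundary between "small" and "larger"
    min_sample: int = 100,               # hypothetical minimum deliveries per variation
    min_lift: float = 0.01,              # hypothetical minimum open-rate difference (1 point)
) -> bool:
    """Two-sided two-proportion z-test on open rates (illustrative, not the product's method)."""
    if delivered_a < min_sample or delivered_b < min_sample:
        return False  # not enough data yet
    confidence = 0.85 if audience_size < small_audience_cutoff else 0.95
    p_a, p_b = opens_a / delivered_a, opens_b / delivered_b
    if abs(p_a - p_b) < min_lift:
        return False  # difference too small to matter
    pooled = (opens_a + opens_b) / (delivered_a + delivered_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / delivered_a + 1 / delivered_b))
    if se == 0:
        return False  # every recipient opened, or none did
    z = abs(p_a - p_b) / se
    p_value = 2 * (1 - NormalDist().cdf(z))
    return p_value < 1 - confidence


print(is_confident(120, 400, 100, 400, audience_size=2_000))   # True  (p is about 0.11, below 0.15)
print(is_confident(120, 400, 100, 400, audience_size=20_000))  # False (p is about 0.11, above 0.05)
```

The same 30% vs. 25% open rates count as a confident result for a small audience but not for a larger one, which reflects the two documented confidence levels. With more than two variations, one simple extension is to compare the leader against the runner-up; a production system would also need to account for checking results repeatedly over time, which this sketch ignores.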

Limitations

- Maximum of 8 subject line variations per email blast

- A/B test settings cannot be modified once the email has been sent

- Test results are only meaningful with sufficient sample sizes

- Requires email tracking to be enabled (automatically enabled for A/B tests)

Troubleshooting

A/B Test button is disabled

- Check that your billing plan includes A/B testing features

- Upgrade to the Standard plan or higher to access A/B testing

No clear winner selected

- Increase test group size for more data

- Extend maximum test time

- Consider manual winner selection

- Ensure subject line variations are sufficiently different

Low open rates across all variations

- Review your email sender reputation

- Check if emails are landing in spam folders

- Consider your sending time and frequency

- Review your email list quality

Updated 2 months ago